Disclaimer: This article is for educational and research purposes only.

ChatGPT has been shown to produce very humanlike content for article generation. One issue with using the AI tool for this task is the lack of knowledge for the information might be coming from. It cannot provide citations for the information and can sometimes be unreliable in the quality of the information provided. In this project I attempt to creatively solve this issue.

OpenAI’s ChatGPT

Let’s begin by briefly explaining a high-level view of what ChatGPT is. ChatGPT is a variant of the GPT (Generative Pretrained Transformer) series, developed by OpenAI. It’s a state-of-the-art language model that excels in understanding and generating human-like text. At its core, ChatGPT is designed to simulate human conversation in a coherent and contextually relevant manner. This capability makes it an invaluable tool for a wide range of applications, from customer service chatbots to creative writing assistance.

It is an important distinction to recognize that the model does not ‘know’ what it is being asked. One of the fundamental aspects of ChatGPT is its nature as a predictive model. Essentially, ChatGPT operates by predicting the next word in a sequence of words. This process is based on the vast amount of text data it was trained on. Each time ChatGPT generates a response, it’s essentially calculating the probabilities of what the next word should be, given the input it has received. This approach allows it to construct sentences, paragraphs, and even entire articles that are coherent and contextually appropriate.

From a data science perspective, ChatGPT can be viewed as an advanced form of a prediction model. It leverages deep learning, specifically a form of neural network known as a transformer, to analyze patterns in text data. By recognizing these patterns, ChatGPT can produce outputs that are not only contextually accurate but also nuanced and sophisticated. This predictive capability is what sets ChatGPT apart in the field of natural language processing (NLP). I should note that there are other large language models that have been built to perform similar functions as OpenAI’s ChatGPT. Some of these are open-sourced and can be downloaded.

Adapting the Project Across Industries

Before I move onto the specific project I created, I want to very briefly explain how similar projects could be made using the same techniques. Since creating an article might not be the best use case for everyone. For example:

- An e-commerce company wants to improve its customer service by providing quick, accurate, and personalized responses to customer inquiries. The ChatGPT model can be trained using the company’s product catalogs, FAQs, and customer service transcripts.

- A law firm aims to streamline the analysis of legal documents and case precedents to assist lawyers in preparing for cases. In this scenario, the code can be adapted to analyze legal documents, court rulings, and case studies.

- A corporation requires efficient generation of financial reports that analyze various aspects of the business. The ChatGPT model can be trained on financial terminology and company-specific data. It’s combined with a similarity search to pull data from financial statements, market reports, and internal financial analyses.

- Lastly, perhaps we want to use a similar method for educational content. The code can be adapted to use educational materials as its database. ChatGPT, guided by PM logic, can generate educational content like lesson plans, quizzes, and explanatory texts. FAISS can help locate relevant educational resources and examples.

Leveraging ChatGPT and Similarity Algorithms in Article Writing

Why should we make use of similarity algorithms in article writing, when ChatGPT has shown it can (for the most part) generate reliable information?

ChatGPT is trained on a vast corpus of textual data sourced from diverse materials available on the internet. This training process involves the model learning patterns, styles, and information from these texts without retaining direct links to the original sources. As a result, while ChatGPT can generate content that is informed by the data it was trained on, it cannot pinpoint and cite specific sources for the information it provides. This is a fundamental limitation arising from the way large language models like ChatGPT are trained.

It should be understood that the model’s responses are amalgamations of learned information, not direct quotes or paraphrases from specific texts. This means that even if prompted to provide a citation for the information it provides (unless using the newer web search capabilities of GPT-4-turbo), the model does not have a specific citation for the information.

This is where similarity algorithms become crucial. They can be employed to scan a database of documents to find and suggest sources that closely align with the prompt provided by a user. By integrating these algorithms, along with custom instructions to the model, writers can be confident where the information provided is coming from.

Using FAISS for Similarity Searches

FAISS (Facebook AI Similarity Search) is an efficient library developed by Facebook’s AI Research team for performing similarity search and clustering of dense vectors. In this project, I make use of FAISS embeddings to perform similarity searches within my source documents.

At the heart of FAISS is the concept of vector space. It converts data (like text or images) into numerical vectors using embedding techniques. These vectors represent the features of the data in a multi-dimensional space. The primary function of FAISS is to facilitate the quick retrieval of data points that are similar to a given query point in this vector space. It does this by comparing the distance (like Euclidean distance or cosine similarity) between the query vector and the dataset vectors. The closest vectors – those with the smallest distances – are considered the most similar to the query.

Now that we have briefly gone over ChatGPT and FAISS, let’s move onto the actual project.

Overview of the Project

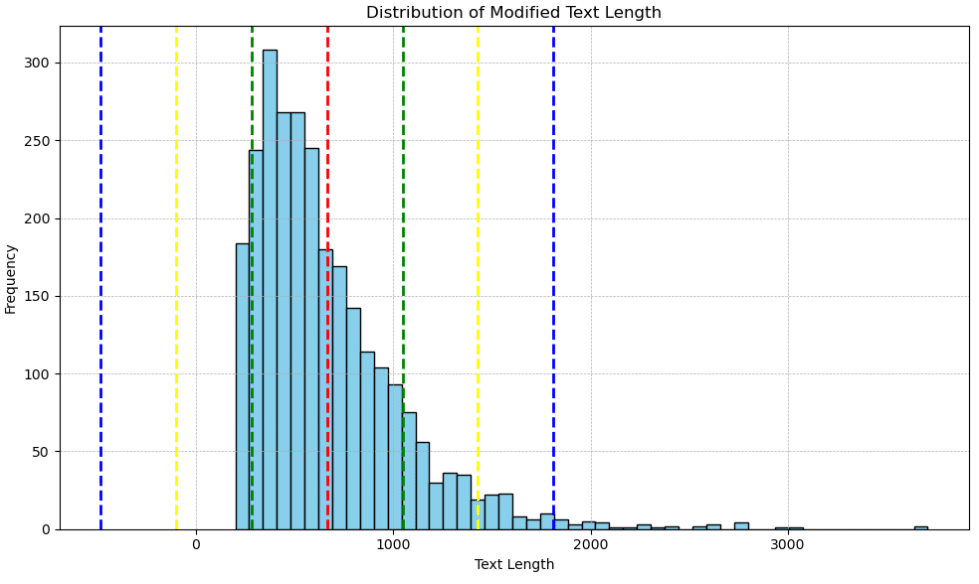

In this project, our overall goal is to create a method of article generation using ChatGPT. Considering the limitation of citations when using the model, I wanted to create a way to have ChatGPT write an article using only specific source context, labeled with its citation. Through my own experimentation with ChatGPT, and similar models, I found that it tends to work best when it is guided through content generation, rather than asking for the entire article in a single prompt. This can be due to token limits placed on the models for both prompting and content generation. Think of tokens as a type of currency, which is based on the length of the prompt and generated text. We can simplistically think of one token as three characters in text for ChatGPT models.

Due to this, the output is typically confined by the number of tokens allowed by a request. The generated content will attempt to fit the token limits, thus reducing specificity of the generated output. If we break the article up into sections, each section can be given greater specificity since it can make use of the full token limit for each prompt/answer pair. Another consideration is that the source paragraphs that are provided to the model also count against the token limits. These paragraphs are considered ‘context’ and are appended to the prompt.

Another aspect of this project is the use of multiple instances of ChatGPT, which ‘talk’ to each other for the completion of the task. By creating one instance to ‘manage’ the project and another for content drafting, I can automate the entire article.

Source Documents for FAISS Embeddings

For this project I selected documents (for educational and research purposes), that cover various aspects of Data Science and included citations. After cleaning the documents to remove everything except the textual content and splitting the text by paragraphs, we ended up with over 1,000 distinct paragraphs, with paired citations for where the information originally came from.

The first task is to train the article generator by creating the FAISS index using OpenAI Embeddings. This index will be used for the similarity search.

Project Management and Article Generation

After training on the document source paragraphs, we created two instances of ChatGPT using python and the OpenAI API. One instance will represent a Project Manager. The other will be a Writing Assistant. The project manager’s task is to keep the content generation moving forward towards completion, as well as revise content drafts to seamlessly fit into the overall article. The writing assistant writes the initial drafts of each section. Each of these instances are provided their own custom instructions on how to format and create output. These instructions are used to confine the chatbot instances to using only the information supplied to it from our source documents. This division of labor between the instances of the chatbot mimics that of an actual team, producing higher quality content.

PM Logic

The PM logic in my code is designed to orchestrate and oversee the content creation process. This instance of ChatGPT serves as a project manager, guiding the structure and development of the article. It starts by generating an outline for each section, ensuring that the content adheres to a predefined structure which will be used by the second instance. Each section is then provided, sequentially, to the writing assistant as a specific task. This logic is iterative and dynamic; it not only initiates content generation for new sections but also revises each section after the writing assistant generates the initial draft. The revision process involves refining the content for coherence, eliminating redundancy, and integrating feedback. This ensures that each part of the article flows logically into the next and maintains a consistent quality and style throughout.

The PM() function in the code is critical for this role, alternating between content generation and content refinement tasks, thereby acting as the backbone of the project management aspect of the article creation process.

The Writing Assistant

The Writing Assistant is tasked with the actual content generation. This ChatGPT instance takes the instructions provided by the PM logic and creates the initial drafts of the article sections. It leverages the model’s language generation capabilities to produce content that is not only relevant to the outlined topics but also written in a coherent and engaging manner. Additionally, the ‘onMessage’ function in the code facilitates the Writing Assistant’s interaction with the FAISS-based similarity search. This enables the Assistant to write the article section with contextually relevant information, drawing from the database of paragraphs to find content that aligns with the article’s subject matter. The FAISS indexes have been paired with the citations for the source documents. This allows the Writing Assistant to not only use the context data, but to also apply the appropriate citations to the information.

The Writing Assistant’s role is crucial in transforming the structured outline into a fleshed-out, informative article, making the most of ChatGPT’s ability to generate diverse and sophisticated text.

Important Considerations

I have found, as have other researchers, that when using models such as these for content generation it is vitally important to manually review the generated output. Not only can these models sometimes behave unpredictably and disregard the specific instructions they were given, but they can sometimes input incorrect information. They may also exacerbate bias that might be found within the source documents.

So how did the ChatGPT article generation perform?

Here is a brief example of some output provided by this article generator (limited by the total number of tokens I could use for the combined prompt/answer pairs):

Section Title: Introduction to Ordinary Least Squares Regression Ordinary Least Squares

(OLS) regression stands as a cornerstone statistical method for modeling and interpreting the relationships between variables. Essential to its appeal is the minimization of the sum of squared deviations, which mathematically determines the best-fit line for the data in question [Kuhn & Johnson, 2013]. By doing so, OLS regression helps predict outcomes and explore variable associations with clarity. Diverse fields benefit from the application of OLS regression. Economists employ it to forecast financial trends, engineers to refine processes, and policy analysts to evaluate the effects of legislative changes. The method’s ubiquity is a testament to its accessibility and the intuitive insights it offers into data relationships [Kuhn & Johnson, 2013].

Underpinning OLS regression are several critical assumptions about data, such as linearity, independence, and homoscedasticity. These ensure the method’s outputs are both reliable and valid. A deeper understanding of these assumptions will be provided later in this series, setting the stage for a comprehensive grasp of OLS regression. To encapsulate, OLS regression’s blend of simplicity and analytical power secures its status as an indispensable instrument in the statistical toolbox, wielded by specialists across countless disciplines to illuminate the patterns hidden within data.

Section Title: Historical Perspective of OLS Regression The origins of Ordinary Least Squares

(OLS) regression trace back to the work of luminaries such as Carl Friedrich Gauss and Adrien-Marie Legendre in the early 1800s. While Legendre introduced the method in 1805, Gauss published his influential work in 1809, igniting a debate over the attribution of the technique’s discovery. Both scholars, however, undeniably played pivotal roles in the development of OLS regression as they applied it to astronomical data, laying the groundwork for its future use [Rafferty, 2021]. As the discipline of statistics grew, so did the applications of OLS regression. By the turn of the 20th century, it had firmly established itself as an essential tool for statistical analysis, its use expanding beyond astronomy to fields as diverse as biology, economics, and social sciences. The introduction of computers in the 20th century further revolutionized OLS regression, enabling the analysis of complex and voluminous datasets with greater speed and precision. This technological advancement cemented OLS regression’s place as a critical instrument for researchers to decode intricate variable interactions [Rafferty, 2021].

The story of OLS regression is a testament to the enduring influence of its early pioneers and the subsequent generations of statisticians who have continued to refine the method. Today, OLS regression remains a cornerstone of statistical analysis across various disciplines, its historical significance matched only by its ongoing utility.

References:

Kuhn, M., & Johnson, K. (2013). Applied Predictive Modeling (Vol. 26, p. 13). New York: Springer.

Rafferty, G. (2021). Forecasting Time Series Data with Facebook Prophet: Build, improve, and optimize time series forecasting models using the advanced forecasting tool. Packt Publishing Ltd.

ARIMA, ARDL, and ARMA:

ARIMA, ARDL, and ARMA: